Introduction

In our increasingly connected world, massive amounts of data are generated every second by smart devices in homes and factories, sensors in vehicles, video streams, and wearable health monitors. The traditional approach of sending all of that data to distant cloud data centers for processing introduces delays (latency), creates bandwidth bottlenecks, and sometimes raises privacy concerns. Edge computing is a paradigm that brings computation and data storage closer to where they’re needed, at the “edge” of the network. It’s transforming how we think about speed, efficiency, and responsiveness in applications such as the IoT (Internet of Things), autonomous vehicles, and real-time analytics. In this article, you will learn what edge computing is, how it works, its benefits and challenges, and where it’s headed, covering both the technical side and the reasons it is becoming essential in many industries.

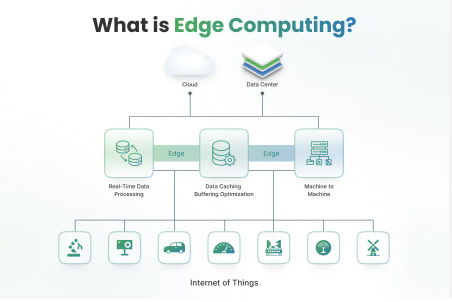

What is Edge Computing? Definition & Core Concept

Edge computing is a distributed computing architecture in which data processing and storage occur closer to the source of data generation, rather than relying exclusively on centralized cloud servers.

Here are its key aspects:

- Proximity to Data Source: Processing happens on the devices themselves (edge devices), on gateways, or on local servers near data sources such as factories, vehicles, sensors, stores, and health monitors.

- Reduced Latency: Because data doesn’t have to travel long distances to central servers, response times are faster.

- Bandwidth Efficiency: Less data is sent over the network; only essential or aggregated results are transmitted to the cloud.

- Enabling Real-Time & Mission-Critical Applications: Edge processing suits applications that need immediate or near-immediate results, such as autonomous driving, robotics, AR/VR, and health monitoring.
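To put rough numbers on the latency and bandwidth points above, here is a back-of-envelope sketch. It assumes signals travel through optical fiber at about 200,000 km/s and ignores queuing, routing, and processing delays, which in practice often dominate. The sensor rate, reading size, and summary format are hypothetical values chosen for illustration, not measurements.

```python
# Back-of-envelope latency and bandwidth figures (all inputs are illustrative assumptions).

# Light in optical fiber travels at roughly two-thirds of its vacuum speed,
# about 200,000 km/s, which is about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, ignoring queuing, routing and processing."""
    return 2 * distance_km / FIBER_KM_PER_MS


def bandwidth_bytes_per_s(samples_per_s: float, bytes_per_sample: int) -> float:
    """Data rate produced by one stream."""
    return samples_per_s * bytes_per_sample


# Hypothetical sensor: 1,000 samples per second, 8 bytes per reading, streamed raw.
raw = bandwidth_bytes_per_s(1000, 8)        # 8000 bytes/s
# Edge alternative: one summary per second (min, max, mean as three 8-byte values).
summary = bandwidth_bytes_per_s(1, 3 * 8)   # 24 bytes/s

if __name__ == "__main__":
    print(f"RTT to a cloud region 1,500 km away: {propagation_rtt_ms(1500):.1f} ms")  # 15.0 ms
    print(f"RTT to an edge server 10 km away:    {propagation_rtt_ms(10):.2f} ms")    # 0.10 ms
    print(f"Bandwidth reduction: {raw / summary:.0f}x")                               # 333x
```

Even this optimistic physical floor shows why distance matters for tight control loops, and the summary-versus-raw ratio shows why filtering at the edge can shrink upstream traffic by orders of magnitude.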

How Edge Computing Works: Architecture & Process

To understand how edge computing functions, it helps to look at its architecture and workflow.

Architecture Components

- Edge Devices / Sensors: Devices that collect data—IoT sensors, cameras, mobile devices, industrial machines.

- Edge Gateways / Local Servers: These serve as localized compute units that perform pre-processing, filtering, aggregation, or immediate analytics close to where the data is generated.

- Edge Data Centers / Micro-Data Centers / Cloudlets: Small-scale data centers near the edge. These host more powerful compute resources than local edge devices but are still much nearer than centralized cloud centers.

- Core Cloud Infrastructure: For heavier processing, long-term storage, or cross-domain analytics, data is eventually transmitted to centralized cloud servers. Edge and cloud often work together.

Workflow / Process Flow

- Data Generation & Capture: Edge devices gather raw data continuously.

- Local Processing & Filtering: The raw data is analyzed at the edge device or gateway. Non-critical data may be discarded or summarized.

- Immediate Decision / Action: If something requires a fast reaction (e.g., a safety alert or a detected anomaly), it’s handled locally without a round trip to the cloud.

- Sending Critical Data to Cloud: Only processed, essential data or aggregated metrics are sent to central cloud servers for further analysis, long-term storage, or model training.

- Feedback & Updates: Insights from cloud processing (e.g., improved models, global patterns) may be pushed back to edge devices to improve performance.
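The five workflow steps can be sketched as a small Python class. This is a minimal illustration, not a reference design: `EdgeGateway`, `ingest`, `flush`, and `apply_update` are hypothetical names, the uplink and alert handlers are plain callables standing in for a real transport (such as an MQTT or HTTPS client) and a real actuator, and a fixed threshold stands in for whatever local analytics a deployment would actually run.

```python
import math
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, List


@dataclass
class EdgeGateway:
    """Hypothetical edge gateway that follows the five workflow steps."""

    alert_threshold: float                  # readings above this trigger a local action
    send_to_cloud: Callable[[dict], None]   # stand-in for a real uplink client
    on_alert: Callable[[float], None]       # stand-in for a local actuator or alarm
    window: List[float] = field(default_factory=list)

    def ingest(self, reading: float) -> None:
        # Steps 1-2: capture a reading and discard invalid values locally.
        if math.isnan(reading):
            return
        # Step 3: act immediately, with no cloud round trip.
        if reading > self.alert_threshold:
            self.on_alert(reading)
        self.window.append(reading)

    def flush(self) -> None:
        # Step 4: send one aggregated summary instead of every raw reading.
        if not self.window:
            return
        self.send_to_cloud({
            "count": len(self.window),
            "min": min(self.window),
            "max": max(self.window),
            "mean": mean(self.window),
        })
        self.window.clear()

    def apply_update(self, new_threshold: float) -> None:
        # Step 5: accept a parameter pushed back from the cloud.
        self.alert_threshold = new_threshold


if __name__ == "__main__":
    uplink, alerts = [], []
    gw = EdgeGateway(alert_threshold=80.0, send_to_cloud=uplink.append, on_alert=alerts.append)
    for r in [70.0, 75.0, 85.0, float("nan"), 72.0]:
        gw.ingest(r)
    gw.flush()
    print(alerts)   # [85.0]
    print(uplink)   # [{'count': 4, 'min': 70.0, 'max': 85.0, 'mean': 75.5}]
```

In a real gateway, `flush` would typically run on a timer (for example once per minute), so the cloud receives one small summary per interval while alerts still fire the moment a reading crosses the threshold.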

Key Terms & Related Concepts

To help clarify related ideas and terms you may encounter, here are some relevant concepts:

- Fog computing: Similar to edge but often refers to a layer between edge devices and cloud, used for intermediate processing.

- Latency / Low latency: The delay between an input (such as a sensor reading or a user action) and the corresponding output or response; edge computing aims to minimize it.

- Bandwidth consumption / Bandwidth efficiency: The amount of data transferred across the network; transferring less data means lower bandwidth usage.

- Distributed computing / Distributed architecture: Spreading compute and storage tasks across many nodes rather than a single central server.

- Real-time analytics: Processing data instantly to generate insights or actions with minimal delay.

- Edge AI: Artificial Intelligence models running at the edge, rather than in the cloud.

- Multi-access Edge Computing (MEC): Edge computing implemented in network access points (e.g., telecom base stations) to serve mobile devices.

Benefits of Edge Computing

Edge computing provides many advantages, especially for use cases that demand high responsiveness or involve large volumes of data from many distributed sources:

- Reduced Latency & Faster Response Times: Since processing happens nearer the source, decisions can be made quickly. This is critical for applications like autonomous vehicles, industrial automation, and AR/VR.

- Lower Bandwidth and Transmission Costs: Only necessary or processed data is sent across networks, saving bandwidth and cost.

- Improved Reliability & Resilience: If connectivity to the cloud is lost or degraded, edge systems can keep operating in a reduced but functional mode.

- Enhanced Privacy & Security: By keeping sensitive data local and limiting its exposure over networks, edge computing can offer privacy advantages.

- Scalability & Efficiency: As IoT and connected devices proliferate, edge computing supports scaling by distributing processing loads. It also enables real-time analytics and action without overburdening the cloud.
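The resilience benefit often rests on a store-and-forward pattern: messages are queued locally while the cloud is unreachable and delivered once the link returns. The sketch below assumes a hypothetical `send` callable that returns `True` on success; the bounded in-memory buffer, which drops the oldest messages when full, is one possible policy among several (others spill to disk or drop low-priority data first).

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Hypothetical uplink wrapper that buffers messages while the cloud is unreachable."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000):
        # `send` is assumed to return True on success and False if delivery failed.
        self._send = send
        # Bounded buffer: once full, appending a new message discards the oldest one.
        self._buffer: Deque[dict] = deque(maxlen=max_buffered)

    def publish(self, message: dict) -> None:
        self._buffer.append(message)
        self.flush()

    def flush(self) -> None:
        # Deliver in arrival order; stop at the first failure and retry on the next call.
        while self._buffer:
            if not self._send(self._buffer[0]):
                return
            self._buffer.popleft()

    @property
    def pending(self) -> int:
        """Number of messages still waiting for delivery."""
        return len(self._buffer)
```

Local decisions keep running regardless of the uplink's state; only the reporting to the cloud is deferred, and a periodic call to `flush` drains the backlog once connectivity is restored.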

Challenges and Considerations

Edge computing is powerful, but it comes with trade-offs and challenges:

- Infrastructure & Deployment Complexity: Setting up many local servers and devices, and keeping them maintained, updated, secure, and equipped with adequate hardware, takes significant effort.

- Security & Privacy Risks: More distributed nodes mean more potential attack vectors; ensuring secure communication, device authentication, and data privacy is difficult.

- Resource Constraints: Edge devices often have limited compute power, storage, and energy, and may struggle with very intensive tasks or large AI models.

- Management & Orchestration: Coordinating work between cloud and edge, distributing updates, handling failures, and keeping data consistent or synchronized can be complex.

- Cost and ROI: Edge deployments require an initial investment in hardware and ongoing effort to manage it across many locations, so organizations must make sure the benefits outweigh the costs.
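One building block for the device-authentication problem mentioned above is a message authentication code, which lets the receiver check that a message came from a holder of the key and was not altered in transit. This sketch uses HMAC-SHA256 from Python's standard library and assumes each device shares a secret key with the backend (the key and payload values are hypothetical). Production systems more often rely on mutual TLS with per-device certificates, and would also add timestamps or nonces to block replayed messages, which this example does not do.

```python
import hashlib
import hmac
import json


def sign(payload: dict, device_key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of the payload."""
    # sort_keys and fixed separators make the encoding independent of dict key order.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(device_key, body, hashlib.sha256).hexdigest()


def verify(payload: dict, tag: str, device_key: bytes) -> bool:
    """Recompute the tag and compare in constant time to avoid leaking timing information."""
    return hmac.compare_digest(sign(payload, device_key), tag)


if __name__ == "__main__":
    key = b"hypothetical-device-key"
    reading = {"device": "pump-7", "temp": 71.5}
    tag = sign(reading, key)
    print(verify(reading, tag, key))                              # True
    print(verify({"device": "pump-7", "temp": 99.0}, tag, key))   # False
```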

Real-World Use Cases

Here are some scenarios where edge computing shines:

| Sector | Use Case | Benefit |
| --- | --- | --- |
| Autonomous Vehicles & Transport | On-vehicle processing of sensor data for real-time navigation and collision avoidance | Ultra-low latency is required; safety depends on immediate decision-making. |
| Industrial IoT / Smart Manufacturing | Monitoring machines for anomalies; local predictive maintenance | Reduces downtime; faster detection lowers costs. |
| Healthcare / Telemedicine | Wearables or medical devices processing vital signs locally before sending alerts | Allows fast reaction in emergencies; helps protect patient privacy. |
| Smart Cities / Public Safety | Real-time video analytics, traffic monitoring, emergency response | Minimizes data transfer; enables timely responses that improve public safety. |
| Retail / Edge at Stores | Local processing for payment, personalization, facial recognition, inventory tracking | Improves customer experience; reduces reliance on distant cloud servers; keeps latency low. |
| 5G & MEC | Using 5G base stations with edge compute capability to support high-bandwidth, low-latency apps like AR, VR, and streaming | Increases speed and reduces lag in immersive applications. |
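As a concrete illustration of the Industrial IoT row, local anomaly detection can be as simple as comparing each new reading with the statistics of a sliding window of recent readings. This rolling z-score detector is a deliberately simple stand-in chosen for illustration, not a method the use case prescribes; the window size, the threshold of 3 standard deviations, and the warm-up count are assumed defaults.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScoreDetector:
    """Flags readings far from the mean of a sliding window of recent readings."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_samples: int = 10):
        self.values = deque(maxlen=window)  # fixed memory: the oldest readings fall off
        self.threshold = threshold          # standard deviations that count as anomalous
        self.min_samples = min_samples      # warm-up: no verdicts until enough history exists

    def update(self, x: float) -> bool:
        """Return True if x is anomalous relative to the window, then add x to it."""
        anomalous = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sd = pstdev(self.values, mu)
            # A zero standard deviation (constant signal) gives no basis for a z-score.
            anomalous = sd > 0 and abs(x - mu) / sd > self.threshold
        self.values.append(x)
        return anomalous
```

Because the detector keeps only a fixed-size window, its memory use stays constant, which suits resource-constrained edge hardware. Anomalous readings are still added to the window here; some designs exclude them so that a burst of anomalies does not shift the baseline.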

Edge vs Cloud vs Fog: Where They Differ

- Cloud Computing: Data is sent to centralized data centers; excellent for large-scale storage, heavy analytics, non-latency-sensitive tasks.

- Fog Computing: A middle layer; sometimes seen as extending cloud features closer to edge devices, distributing some compute but not entirely on devices themselves. More hierarchical.

- Edge Computing: Puts compute as close as possible to the data source (or even on the device itself), minimizing delay, often handling real-time processing.

Deciding which approach to use depends on requirements like latency tolerance, security, cost, data volume, and location.
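The decision criteria above can be expressed as a simple placement rule. The tiers mirror the architecture components described earlier, but their latency and compute figures are invented for illustration, and the policy (choose the most capable tier that still meets the latency budget, optionally excluding the cloud for sensitive data) is one reasonable heuristic rather than a standard algorithm. `Tier`, `TIERS`, and `place` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float   # assumed typical round trip from the data source
    compute_units: float   # assumed relative compute capacity (unitless)


# Ordered from nearest to farthest; all figures are illustrative, not benchmarks.
TIERS: List[Tier] = [
    Tier("device", 0.0, 1),
    Tier("edge gateway", 2.0, 10),
    Tier("fog / micro data center", 10.0, 100),
    Tier("cloud", 80.0, 10_000),
]


def place(max_latency_ms: float, compute_needed: float,
          allow_cloud: bool = True) -> Optional[Tier]:
    """Return the farthest (most capable) tier meeting every constraint, or None."""
    candidates = [
        t for t in TIERS
        if t.round_trip_ms <= max_latency_ms
        and t.compute_units >= compute_needed
        and (allow_cloud or t.name != "cloud")
    ]
    # TIERS is ordered by distance, so the last candidate is the farthest that fits.
    return candidates[-1] if candidates else None
```

For example, `place(5, 5)` selects the edge gateway, while a latency-tolerant job such as `place(1000, 50)` lands in the cloud, or in the fog tier when `allow_cloud=False`. Preferring the most capable tier that fits keeps scarce device and gateway resources free for the tasks that truly need them.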

Emerging Trends & Future Directions

- Edge AI / Edge Machine Learning: Models that run locally, mainly for inference and increasingly for training, bringing intelligence closer to devices.

- More Integration with 5G / MEC: As 5G networks roll out, MEC becomes an important enabler to bring edge computing to telecom infrastructure.

- Better Security Frameworks for Edge: Stronger encryption, secure device identity, privacy-preserving techniques.

- Hybrid & Multi-Edge Models: Edge + Cloud working together more seamlessly; intelligently deciding which tasks run where.

- Energy Efficiency & Sustainable Edge Infrastructure: Powering edge sites with renewable energy and optimizing compute to reduce power consumption.

Conclusion

Edge computing is reshaping how we think about data, computation, and how quickly useful insights can be gained. By processing data closer to its source (on devices, local servers, or gateways), this paradigm addresses key limitations of centralized cloud models, especially latency, bandwidth costs, and privacy concerns. While edge computing presents challenges like infrastructure complexity, security, and resource constraints, its benefits for real-time applications in IoT, autonomous systems, healthcare, smart cities, and beyond make it an essential part of the future tech landscape.

Looking ahead, innovations such as Edge AI, Multi-access Edge Computing (MEC), better security protocols, and sustainable designs will continue to accelerate adoption. For organizations deciding whether to implement edge computing, the key is assessing specific needs—how fast responses must be, how sensitive the data is, and whether the investment in edge hardware and management makes sense. Edge computing isn’t replacing cloud computing—it complements it, creating a powerful hybrid ecosystem that delivers speed, efficiency, and responsiveness for modern applications.

FAQs

- What exactly is edge computing in simple terms?

Edge computing means processing data close to where it originates (on devices or local servers), instead of sending it all to a central cloud. This reduces delays, conserves network bandwidth, and enables faster, real-time responses.

- How is edge computing different from cloud computing?

Cloud computing centralizes processing and storage in large data centers, often far away, while edge computing happens near the data source. Cloud is great for heavy analytics and large-scale storage; edge is essential for low latency, localized processing, and immediate insights.

- What are the main benefits of using edge computing?

Key benefits include lower latency (faster response), reduced bandwidth use (since less data is sent over networks), improved reliability (able to operate even with poor network connection), and enhanced privacy/security (keeping sensitive data local).

- What are the common challenges of edge computing deployment?

Challenges include ensuring security across many distributed nodes, managing hardware in diverse locations, optimizing resource-constrained devices, orchestrating data and workloads between edge and cloud, and making sure the investment delivers return.

- In which industries or applications is edge computing most useful?

Edge computing is especially beneficial in sectors like autonomous vehicles, industrial automation, health care (medical devices, wearables), smart cities (traffic monitoring, public safety), augmented/virtual reality, and any scenario where real-time decision making is critical or bandwidth is limited.